All sixty-two of the Rising students who sat this year’s National Primary School Examination (NPSE) in Sierra Leone have passed. This 100% pass rate compares to a national average of 77.6%.

The students’ achievement is all the more remarkable given that they were kept out of school for 6 months last year because of the COVID-19 pandemic.

Not only did they pass, but many achieved outstanding aggregate scores. 57 students (94% of the total) achieved an aggregate score of 289 and above, compared to 10% of students nationally. 10 students (16% of the total) achieved aggregate scores of 313 and above, compared to 1% nationally.

The students, from all four of our primary schools in Sierra Leone, are the first Rising students to sit these exams since we began offering the primary grades. They will now be able to progress to the Junior Secondary phase and, with scores like these, should have their pick of schools (though naturally we hope they’ll all stay with Rising!).

In its official report announcing the results, the Ministry of Basic and Senior Secondary Education also paid tribute to partners (like Rising) who had contributed to radio teaching programmes locally and nationally during and after the pandemic-induced school closures, noting that "without their effort overall performance may not have been as good."

Congratulations to the students, their teachers and school leaders, as well as to our head office team, for this accomplishment. We’re so proud of them and delighted that all their hard work in such difficult circumstances has paid off.

Positive early gains for Partnership Schools and Rising

‘Gold standard’ evaluation finds positive early gains for Partnership Schools and for Rising.

The evaluation team behind the Randomised Controlled Trial (RCT) of Partnership Schools for Liberia (PSL. Okay, that’s enough three-letter abbreviations.) have just released their midline report. The report covers only the first year of PSL (September 2016-July 2017). A final, endline report covering the full three years of the PSL pilot is due in 2019.

While much anticipated, this is only a midline report with preliminary results from one year of a three-year programme. The report therefore strikes a cautious tone, and the evaluation team are careful to caveat their results.

Nevertheless, there are important and encouraging early messages for PSL as a whole and for Rising in particular. Put simply, the PSL programme is delivering significant learning gains, and Rising seems to be delivering among the largest gains of any school operator.

For PSL as a whole, the headline result is that learning in PSL schools improved by 60% more than in control schools or, put differently, by the equivalent of 0.6 extra years of schooling.

These gains seem to be driven by better management of schools by PSL operators: longer school days, closer supervision of staff and more on-task teaching meant that pupils in PSL schools got about twice as much instructional time as pupils in control schools. PSL schools also benefited from having more money and better-quality teachers, particularly new graduates from the Rural Teacher Training Institutes. But the report is clear that, based on their data and the wider literature, it is the interaction of these additional resources and better management that makes the difference; more resources alone are not enough. (Anecdotally, I would add that our ability to attract these new teachers was at least partly because they had more confidence in how they would be managed, which illustrates the point that new resources and different management are not easily separated.)

Rising Results

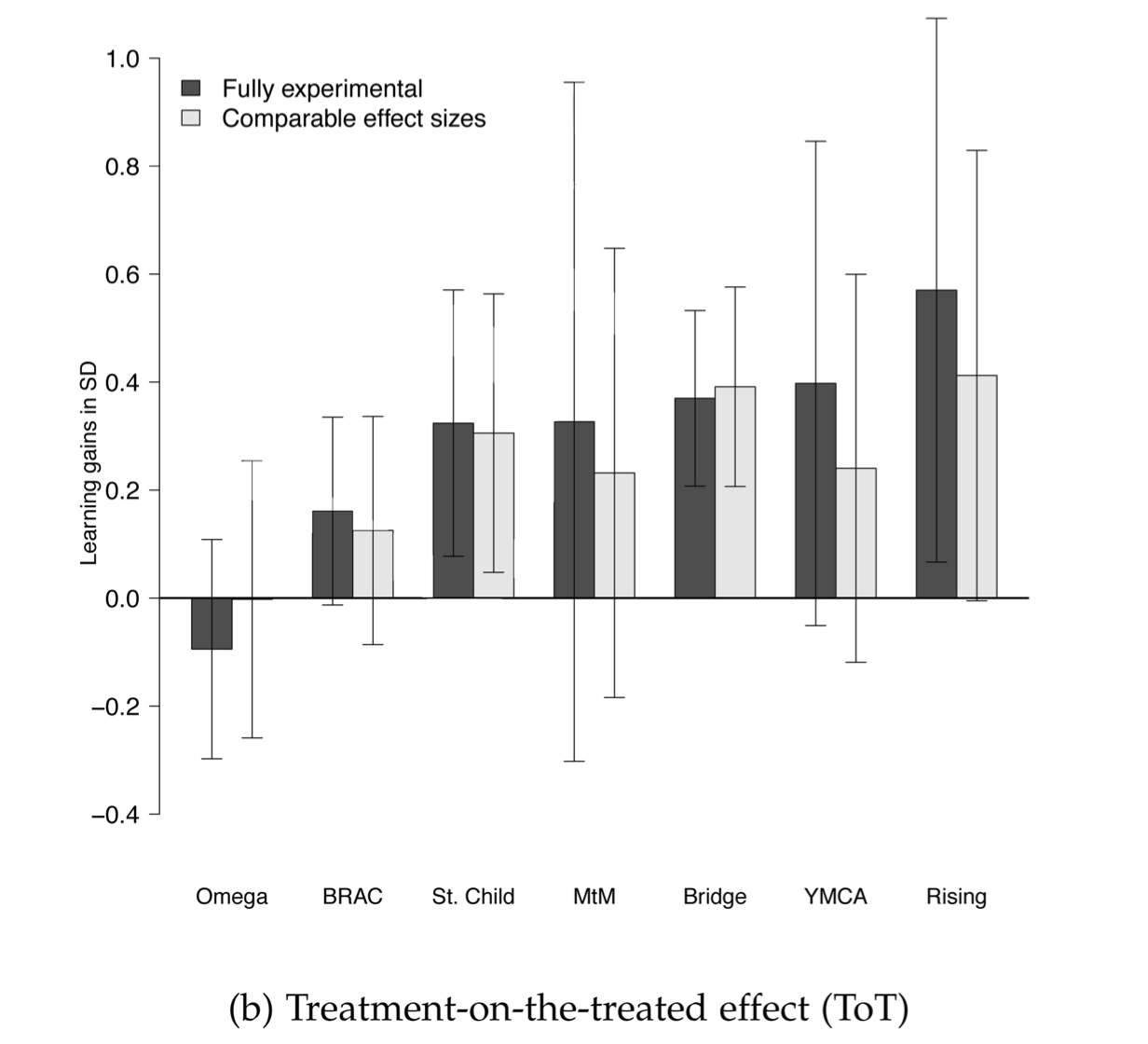

The report also looks at how performance varies across the 8 operators that are part of PSL. Even more than the overall findings, the discussion of operator performance is limited by the small samples of students the evaluation team drew from each school. For operators (like Rising) operating only a small number of schools, this means there is considerable uncertainty around the evaluators’ estimates. That said, the evaluation team do their best to offer some insights.

Their core estimate is that, compared to its control schools, Rising improved learning outcomes by 0.41 standard deviations, or around 1.3 additional years of schooling. This is the highest of any of the operators, though it is important to note that the confidence intervals of several of the higher-performing providers overlap.

However, this core estimate is what’s known as an “intent-to-treat” or ITT estimate. It is based on the 5 schools that were originally randomly assigned to Rising. But we only actually ended up working in 4 of those (* see below). The ITT estimate is therefore a composite of results from 4 schools that we operated and 1 school that we never set foot in. A better estimate of our true impact is arguably offered by looking at our impact just on those students in schools we actually ended up working in. This “treatment on the treated” or TOT estimate is considerably higher, with a treatment effect of 0.57 standard deviations or 1.8 extra years of schooling. This, again, is the highest of any operator, and by a considerably larger margin, though again the confidence intervals around the estimate are large.
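For readers curious how an ITT estimate relates to a TOT estimate, a common back-of-envelope approach is the Bloom (or Wald) adjustment: divide the ITT effect by the share of the assigned group that actually received the programme. The minimal Python sketch below assumes, purely for illustration, that compliance can be measured at school level as 4 of the 5 assigned schools. It gives roughly 0.51 standard deviations rather than the reported 0.57, so the evaluators' TOT figure presumably reflects their own, more detailed specification rather than this simple ratio.

```python
def bloom_tot(itt_effect: float, compliance_rate: float) -> float:
    """Convert an intent-to-treat (ITT) effect into a treatment-on-the-treated
    (TOT) effect using the Bloom/Wald adjustment.

    Assumes nobody in the control group received the programme and that being
    assigned only affects outcomes through actually being treated.
    """
    if not 0 < compliance_rate <= 1:
        raise ValueError("compliance_rate must be in (0, 1]")
    return itt_effect / compliance_rate


# The ITT of 0.41 SD is from the text; the 4-of-5 school compliance rate is an
# ILLUSTRATIVE ASSUMPTION, not how the evaluators measured compliance.
itt_sd = 0.41
compliance = 4 / 5
print(f"Naive TOT: {bloom_tot(itt_sd, compliance):.2f} SD")  # Naive TOT: 0.51 SD
```

The gap between this naive figure and the report's 0.57 is a reminder that a TOT estimate depends on how "treated" is defined and measured.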

Whether the ITT or TOT estimate is more useful depends, in my view, on the policy question you are trying to answer. At the level of the programme as a whole, where the policy question is essentially “what will the overall effect of a programme like this be?”, the ITT estimate seems more useful, because it is fair to assume that some level of ‘non-compliance’ will occur and the programme won’t get implemented in all schools. But at the inter-operator level, where the salient policy question is “given that this is going to be a PSL school, what will be the impact of giving this school to operator X rather than operator Y?”, the TOT estimate seems more informative, because it is based solely on results in schools where those operators were actually working.

A further complication in comparing across operators is that operators have different sample sizes, pulled from different populations of students across different geographical areas, so it cannot be assumed that we are comparing like with like. To correct for this, the evaluators control for observable differences in school and student characteristics (e.g. by using proxies for income status, geographic remoteness, etc.), but they also use a fancy statistical technique called 'Bayesian hierarchical modelling'. Essentially, this assumes that because we are all part of the same programme in the same country, operator effects are likely to be correlated. It therefore dilutes the experimental estimate for Rising by making it a weighted average of Rising's actual performance and the average performance of all the operators. It turns out that adjusting for baseline characteristics doesn’t make much difference (particularly for Rising, since our schools were more typical), but this Bayesian adjustment does. It drags Rising back towards the mean for all operators, and because our sample size is smaller, we are dragged further than operators with larger samples. We still end up with the first or second largest effect, depending on whether the ITT or TOT estimate is used, but by design we are closer to the rest of the pack.
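The intuition behind that adjustment can be sketched with textbook normal-normal partial pooling ("shrinkage"). This is not the evaluators' model, whose details aren't given here: the sketch assumes a known between-operator spread `tau`, and the operators and numbers are invented. What it illustrates is the point made above: two operators with the same raw estimate are pulled towards the overall mean by different amounts, and the one with the smaller sample (larger standard error) is pulled further.

```python
from dataclasses import dataclass


@dataclass
class OperatorEstimate:
    name: str
    effect: float     # raw experimental estimate, in standard deviations
    std_error: float  # larger when the operator's sample is smaller


def partial_pool(estimates: list[OperatorEstimate], tau: float):
    """Normal-normal partial pooling with a fixed between-operator SD `tau`.

    Returns the grand mean and each operator's shrunken estimate, which is a
    weighted average of its own estimate and the grand mean.
    """
    # Grand mean: each operator weighted by 1 / (sampling var + between var).
    weights = [1 / (e.std_error**2 + tau**2) for e in estimates]
    grand_mean = sum(w * e.effect for w, e in zip(weights, estimates)) / sum(weights)

    shrunk = {}
    for e in estimates:
        # Weight kept on the operator's own estimate: near 1 for precise
        # (large-sample) estimates, near 0 for noisy (small-sample) ones.
        keep = tau**2 / (tau**2 + e.std_error**2)
        shrunk[e.name] = keep * e.effect + (1 - keep) * grand_mean
    return grand_mean, shrunk


# Entirely HYPOTHETICAL operators: A and B have the same raw effect,
# but A's estimate comes from a much smaller sample.
ops = [
    OperatorEstimate("A", 0.40, 0.20),
    OperatorEstimate("B", 0.40, 0.05),
    OperatorEstimate("C", 0.10, 0.05),
    OperatorEstimate("D", 0.15, 0.08),
]
mean_effect, pooled = partial_pool(ops, tau=0.10)
print(f"grand mean {mean_effect:.2f}")  # grand mean 0.24
# Prints A 0.27, B 0.37, C 0.13, D 0.18 (one per line)
for name, value in pooled.items():
    print(name, f"{value:.2f}")
```

Both A and B start at 0.40, but A ends up much closer to the grand mean, which is the "dragged back towards the mean" effect described for operators with small samples.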

Some reflections on the results

So what do we make of these results?

First of all, we are strongly committed to the highest levels of rigour and transparency about our impact. We had thought that the study wouldn’t be able to say anything specific about Rising at all for technical reasons to do with the research design (for nerdier readers: it was originally designed to detect differences between PSL schools and non-PSL schools, and was under-powered to detect differences among operators within PSL). We're glad the evaluation team were able to find some ways to overcome those limitations.

Second, it is interesting and encouraging that the results largely confirm the strong progress we had been seeing in our internal data. Those data looked promising, but absent a control group to provide a robust counterfactual, it was impossible to know for sure that the progress we were seeing was directly attributable to us. As we said at the time, and as the evaluation team note in an appendix to this report, our internal data were for internal management purposes and were never meant to have the same rigour as the RCT. But as it turns out, our internal data and the RCT data are pretty consistent. Our internal data suggested that students had made approximately 3 grades’ worth of progress in one academic year; the TOT estimate in the RCT is that they had made approximately 2.8 grades’ worth of progress in one academic year. Needless to say, knowing that we can have a good amount of conviction in what our internal data are telling us is very important from a management point of view.

Third, while making direct comparisons between operators is tricky for the reasons noted above, on any reasonable reading of this evidence, Rising emerges as one of the stronger operators, and this result validates the decision by the Ministry of Education to allocate 24 new schools to Rising in Year 2. In both absolute and relative terms, this was one of the larger school allocations, and it reflected the Ministry’s view that Rising was one of the highest-performing PSL operators in Year 1. It is good, not just for us but for the principle of accountability underlying the PSL programme as a whole, that the RCT data confirm the Ministry’s positive assessment of Rising’s performance.

Taking up the challenge

I also want to be very clear about the limitations of the data at this stage. It is not just that it’s very early to be saying anything definitive. It’s also that these data do not yet allow Rising, or really any operator, to fully address two of the big challenges that have been posed by critics of PSL.

The first challenge is around cost. As the evaluators point out, different operators spent different amounts of money in Year 1, and all spent more than would typically be made available to a government school. In the end, judgments about the success of PSL, or of individual operators within it, will need to include some assessment not just of impact but of value for money. PSL can only be fully scaled if it can be shown to be both effective and affordable. Rising was one of the operators whose unit costs were relatively high in Year 1. That’s because a big part of our costs is the people and systems in our central team, and with just 5 schools in Year 1 we had few economies of scale. These costs should fall precipitously once they are shared across a much larger number of schools and students. But that’s a testable hypothesis on which the Ministry can hold us to account. In Year 2, we need to prove to them that we can deliver the same or better results at a significantly lower cost per student.
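The economies-of-scale argument is simple arithmetic: central costs are roughly fixed, so spreading them over more schools lowers the cost per student. The sketch below uses the 5 schools of Year 1 and the 29 schools of Year 2, but every cost figure and the enrolment per school are invented placeholders, not Rising's actual numbers.

```python
def cost_per_student(central_costs: float, cost_per_school: float,
                     schools: int, students_per_school: int) -> float:
    """Average cost per student when fixed central costs are shared across schools."""
    total_cost = central_costs + cost_per_school * schools
    return total_cost / (schools * students_per_school)


# HYPOTHETICAL figures in a single currency unit, for illustration only.
CENTRAL = 500_000.0    # head-office people and systems, per year
PER_SCHOOL = 20_000.0  # costs that grow with each extra school
STUDENTS = 300         # students per school

# Prints "5 400.0" then "29 124.14"
for n_schools in (5, 29):
    print(n_schools, round(cost_per_student(CENTRAL, PER_SCHOOL, n_schools, STUDENTS), 2))
```

Under these assumptions the per-student cost falls by roughly two-thirds, purely because the central costs are divided among more students; whether real costs behave this way is exactly the testable hypothesis described above.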

The second challenge is around representativeness. One criticism that has been aired is that Year 1 schools were the low-hanging fruit. As the evaluation makes clear, it is simply not true that Year 1 schools were somehow cushy, but it is true that they were generally in easier-to-serve, somewhat less disadvantaged communities than the median Liberian school. That’s precisely why the Ministry of Education insisted that the schools we and other operators will be serving in Year 2 be disproportionately located in the South East of Liberia, where those concerns about unrepresentativeness do not apply. If we can continue to perform well in these more challenging contexts, it will go some way to answering the question of whether PSL can genuinely become part of the solution for the whole of Liberia.

In short, the RCT midline provides a welcome confirmation of what our own data were telling us about the positive impact we are having. Our task for the coming academic year is to show that we can sustain and deepen that impact, in more challenging contexts, and more cost-effectively. A big task, but one that we are hugely excited and honoured to be taking on.

A little over a year ago, Education Minister George Werner showed a great deal of political courage, not just in launching this programme but in insisting that it be the subject of a ‘gold standard’ experimental evaluation. One year on, these results show that his vision and conviction are beginning to pay dividends. This report is not the final word on PSL, but the next chapter promises to be even more exciting.

* Footnote: as the evaluators note in their report, the process of randomly assigning schools in summer 2016 was complex, made even more challenging by the huge number of moving pieces for both operators and the Government of Liberia as both endeavoured to meet incredibly tight timescales for opening schools on September 5th. Provisional school allocations changed several times; by August 14th, three weeks before school opening, we still did not know the identity of our fifth school and it was proving very difficult to find a pair of schools near enough to our other schools to be logistically viable. Faced with the choice of dragging the process out any longer and potentially imperilling operational performance or opting to run a fifth school that was not randomly assigned, we agreed with the Ministry on the latter course of action.

Expanding our network in Liberia

Photo credit: Kyle Weaver

We're proud to announce that the Ministry of Education has invited Rising Academies to significantly expand its school network in Liberia. From September 2017, Rising Academies will be operating 29 government schools across 7 counties.

The move comes as part of an expansion of the Partnership Schools for Liberia (PSL) program. To learn more about PSL, click here. The Ministry’s decision to award Rising more schools in the second year of PSL followed an in-depth review and screening process, including unannounced spot checks of PSL schools. Rising Academies was one of three providers to be awarded the top “A” rating for its strong performance in Year 1.

We're really proud of the progress our schools have made this year. If you want to learn more about how we've been rigorously tracking this progress and using data to inform our approach, check out our interim progress report here.

Our press release on the announcement of the Ministry's Year 2 plans is available here.

First year evaluation results show promise

Feedback is central to teaching and learning at Rising Academies. Students and teachers learn to give and receive feedback using techniques like Two Stars and a Wish or What Went Well...Even Better If. The Rising Academy Creed reminds us that "Our first draft is never our final draft." Given that, it would be pretty strange if Rising as an organisation didn't also embrace feedback on how well we are doing at enabling more children to access quality learning.

That's why, even as a new organisation, we've made rigorous, transparent monitoring and evaluation a priority from the outset. Internally, we've invested in our assessment systems and data. But my focus here is on external evaluation, because I'm excited to report that we have just received the first annual report from our external evaluators. If you want to understand the background to the study and our reactions to the first annual report, read on. If you're impatient and want to jump straight into the report itself, it's here.

Background

Last year, we commissioned a team led by Dr David Johnson from the Department of Education at Oxford University to conduct an independent impact evaluation of our schools in Sierra Leone.

The evaluation covers three academic years:

- (The abridged) School Year 2016 (January-July)

- School Year 2016-17 (September-July)

- School Year 2017-18 (September-July)

The evaluation will track a sample of Rising students over those three years and compare their progress to that of a comparison group of students drawn from both other private schools and government schools.

The overall evaluation will be based on a range of outcome measures, including standardised tests of reading and maths, a measure of writing skills, and a mixed-methods analysis of students' academic self-confidence and other learning dispositions.

The evaluation is based on what is known as a 'quasi-experimental' design rather than a randomised controlled trial (unlike our schools in Liberia, where we are part of a much larger RCT). But by matching the schools (on things like geography, fee level, and primary school exam scores), randomly selecting students within schools, and collecting appropriate student-level control variables (such as family background and socio-economic status), the idea is that it will ultimately be possible to develop an estimate of our impact over these 3 years that is relatively free of selection bias.

Figure 1: How the evaluation defines impact

BASELINE

To make sure any estimate of learning gains is capturing the true impact of our schools, one of the most important control variables to capture is students' ability levels at baseline (i.e. at the start of the three-year evaluation period). This allows for an estimate of the 'value-added' by the student's school, controlling for differences in cognitive ability among students when they enrolled. Baselining for the evaluation took place in January and February 2016. The baseline report is available here. It showed:

- That on average both Rising students (the treatment group) and students in the other schools (the comparison group) began their junior secondary school careers with similar ability levels in reading and maths. The two groups were, in other words, well matched;

- That these averages were extremely low - for both reading and maths, approximately five grades below where they would be expected to be given students' chronological age.
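As a rough illustration of what "controlling for baseline" means in a value-added estimate, the sketch below regresses follow-up scores on baseline scores plus a treatment indicator (an ANCOVA-style model), using the Frisch-Waugh-Lovell theorem so it needs only the standard library. It is not the evaluators' specification, which includes further student-level controls, and the scores are made up so that the true school effect is exactly 5 points. Comparing it with a naive difference in average gains shows why baseline matters when the groups start at slightly different levels.

```python
from statistics import mean


def _ols_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Intercept and slope of the least-squares line of y on x."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


def _residuals(x: list[float], y: list[float]) -> list[float]:
    """What is left of y after removing its linear relationship with x."""
    intercept, slope = _ols_line(x, y)
    return [b - (intercept + slope * a) for a, b in zip(x, y)]


def value_added(baseline: list[float], follow_up: list[float], treated: list[bool]) -> float:
    """School effect from OLS of follow_up on [1, baseline, treated].

    Frisch-Waugh-Lovell: partial the baseline score out of both the outcome
    and the treatment dummy, then regress one residual on the other.
    """
    t = [1.0 if flag else 0.0 for flag in treated]
    r_outcome = _residuals(baseline, follow_up)
    r_treat = _residuals(baseline, t)
    return sum(a * b for a, b in zip(r_treat, r_outcome)) / sum(a * a for a in r_treat)


# MADE-UP scores: follow-up = 10 + 0.9 * baseline + 5 * treated, with no noise.
baseline = [40.0, 50.0, 60.0, 30.0, 45.0, 70.0]
treated = [True, True, True, False, False, False]
follow_up = [10 + 0.9 * b + 5 * t for b, t in zip(baseline, treated)]

# Naive comparison: difference in average gains, ignoring where students started.
gains = [f - b for f, b in zip(follow_up, baseline)]
naive_gap = (mean(g for g, t in zip(gains, treated) if t)
             - mean(g for g, t in zip(gains, treated) if not t))

print(round(naive_gap, 2))                                 # 4.83
print(round(value_added(baseline, follow_up, treated), 6))  # 5.0
```

The regression recovers the built-in effect of 5 points, while the naive gain comparison is slightly off because the two made-up groups had different baseline averages.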

YEAR ONE PROGRESS REPORT: RESULTS

The Year One Progress Report covers Academic Year 2016. The Ebola Crisis of 2014-15 disrupted the academic calendar in Sierra Leone. Students missed two full terms of schooling. The Government of Sierra Leone therefore introduced a temporary academic calendar, with the school year cut from three terms to two in 2015 (April-December) and again in 2016 (January-July). The normal (September-July) school year will resume in September 2016.

The Progress Report therefore covers a relatively short period: essentially 4.5 months, from late January when baselining was undertaken to late June when the follow-up assessments took place. It would be unrealistic to expect major impacts in such a short period, and any impacts that were identified would need to be followed up over the next two academic years to ensure they were actually sustained. As the authors note, "it is a good principle to see annual progress reports as just that – reports that monitor progress and that treat gains as initial rather than conclusive. A more complete understanding of the extent to which learning in the Rising Academy Network has improved is to be gained towards the end of the study."

Nevertheless, this report represents an important check-in point and an opportunity for us to see whether things look to be heading in the right direction.

Our reading of the Year One report is that, broadly speaking, they are. To summarise the key findings:

- The report finds that Rising students made statistically significant gains in both reading and maths, even in this short period. Average scaled scores rose 35 points in reading (from 196 to 231) and 36 points in maths (from 480 to 516). To put these numbers in context, this change in reading scores corresponds to 4 months' worth of progress (based on the UK student population on which these tests are normed) in 4.5 months of instruction.

- These gains were higher than for students in comparison schools. The differences were both statistically significant and practically important: in both reading and maths, Rising students gained more than twice as much as their peers in other private schools (35 points versus 13 points in reading, and 36 points versus 4 points in maths). Students in government schools made no discernible progress at all in either reading or maths. (For the more statistically inclined, this represents an effect size of 0.39 for reading and 0.38 for maths relative to government schools, or 0.23 for reading and 0.29 for maths relative to private schools, which is pretty good in such a short timespan.)

- The gains were also equitably distributed, in that the students who gained most were the students who started out lowest, and there were no significant differences between boys and girls.

- Finally, there are early indications that students' experience of school is quite different at Rising compared to other schools. Rising students were more likely to report spending time working together and supporting each other's learning, and more likely to report getting praise, feedback, and help from their teachers when they get stuck.
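An effect size puts gains on different tests onto one scale by dividing the difference in average gains by a standard deviation. The minimal sketch below takes the reading gains quoted above (35 versus 13 points) but uses invented standard deviations and group sizes, since this post does not report the ones the evaluators used; their reported 0.23 comes from their own analysis, so the output here should not be read as a check on that figure.

```python
from math import sqrt


def pooled_sd(sd_a: float, n_a: int, sd_b: float, n_b: int) -> float:
    """Pooled standard deviation of two groups."""
    return sqrt(((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / (n_a + n_b - 2))


def effect_size(gain_treat: float, gain_comp: float, sd: float) -> float:
    """Standardised difference in mean gains (a Cohen's d-style effect size)."""
    return (gain_treat - gain_comp) / sd


# Reading gains are from the text; the SDs and group sizes are HYPOTHETICAL.
sd = pooled_sd(sd_a=90.0, n_a=120, sd_b=110.0, n_b=120)
print(round(effect_size(35, 13, sd), 2))  # 0.22
```

Because the denominator is a standard deviation, the same 22-point gap would look larger on a test where scores are less spread out, which is why effect sizes rather than raw points are used to compare reading and maths.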

That's the good news. What about the bad news? The most obvious point is that in absolute terms our students' reading and maths skills are still very low. They are starting from such a low base that one-off improvements in learning levels are not good enough. To catch up, we need to sustain and accelerate these gains over the next few years.

That's why, for example, we've recently been partnering with Results for Development to prototype and test new ways to improve the literacy skills of our most struggling readers, including a peer-to-peer reading club.

So what are my two stars and a wish?

- My first star is that our students are making much more rapid progress in our schools than they did in their previous schools, or than their peers are making in other schools they might have chosen to attend;

- My second star is that these gains are not concentrated in a single subset of higher ability students but widely and equitably shared across our intake;

- My wish is that we find ways to sustain these gains next year (particularly as we grow, with 5 new schools joining our network in September 2016) and accelerate them through innovations like our reading club. If we can do that, and with the benefit of 50% more instructional time (as the school year returns to its normal length), we can start to be more confident we are truly having the impact we're aiming for.

Take a look at the report yourself, and let us know what you think. Tweet me @pjskids or send me an email.